Tartan-Dynamic — 3D Reconstruction Benchmark in Dynamic Scenes

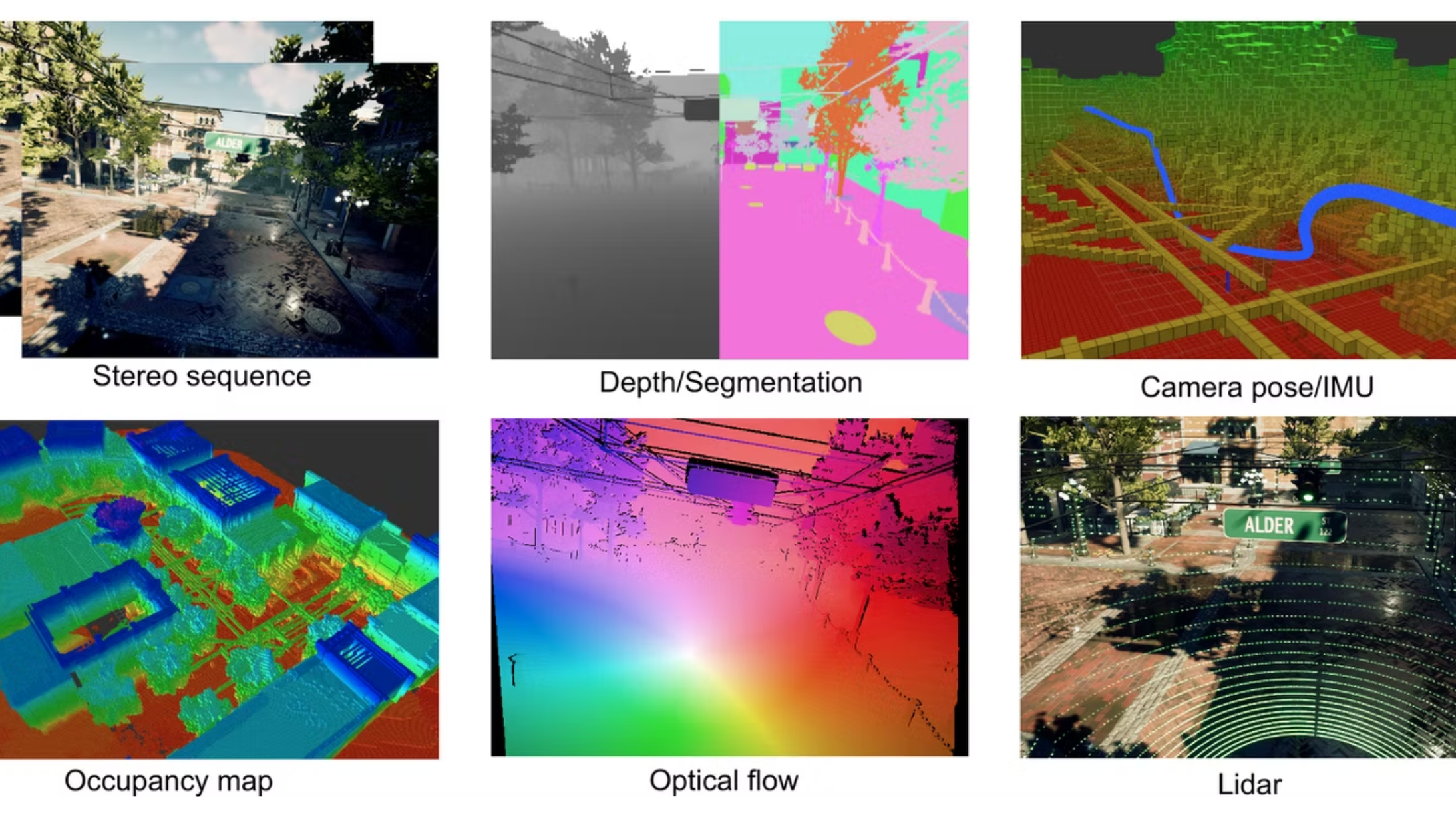

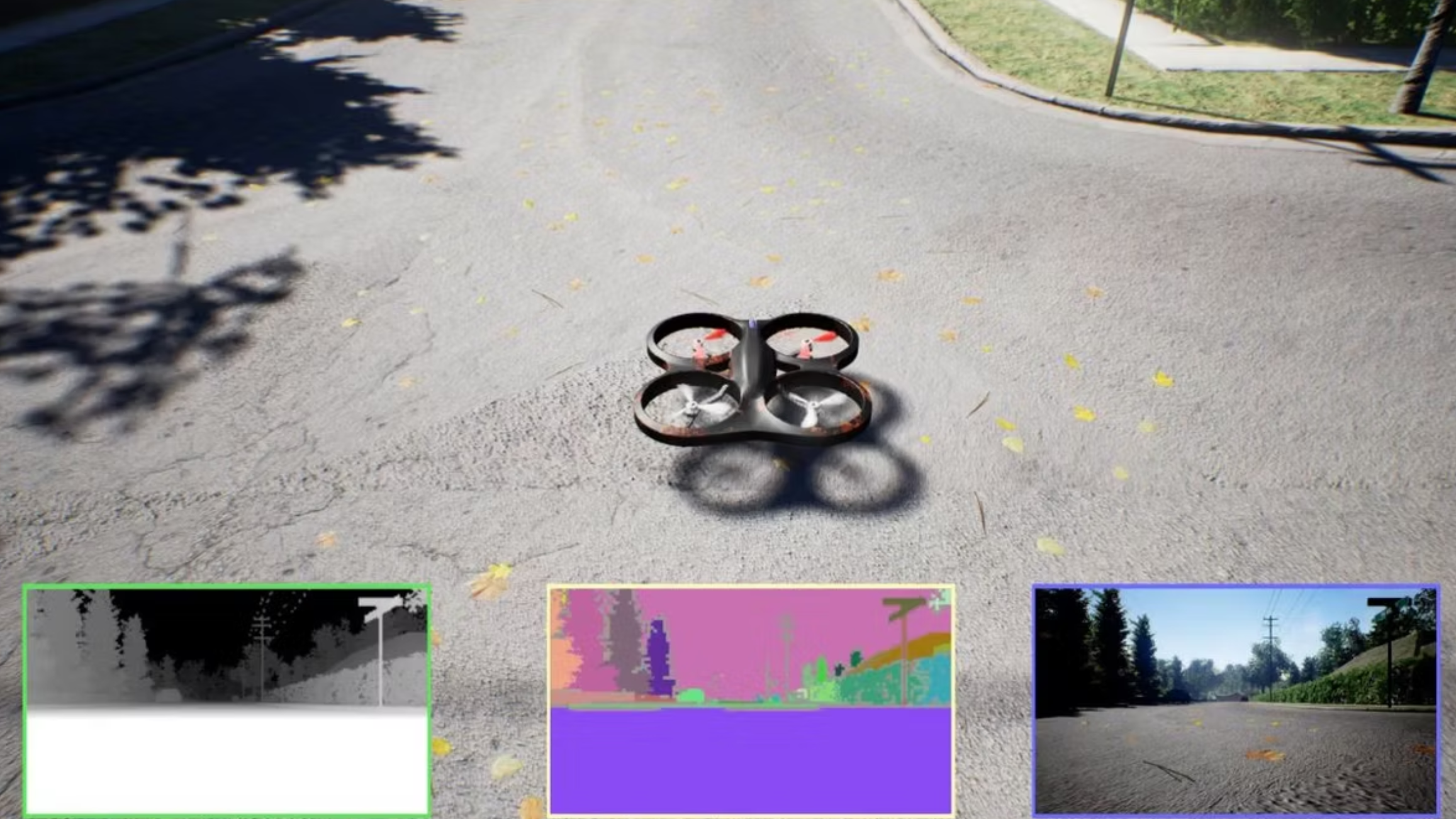

A benchmark pipeline that generates dynamic-scene datasets with true novel-view splits, a synchronized simulation and planning stack, and reproducible RGB/Depth/LiDAR/pose capture.

Why it matters

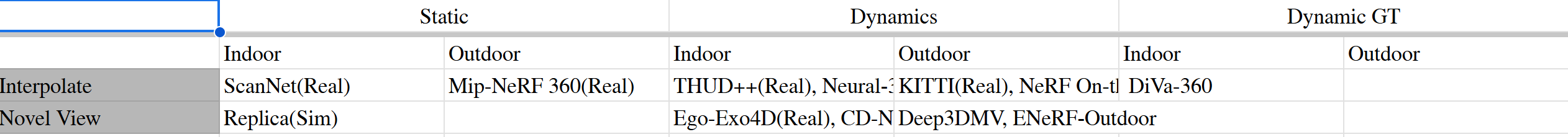

- Interpolation ≠ Generalization: Traditional datasets draw test views from frames adjacent to the training views, so models can score well without true extrapolation.

- Low dynamic pressure: Most benchmarks feature few moving objects; our set fills the frame with motion.

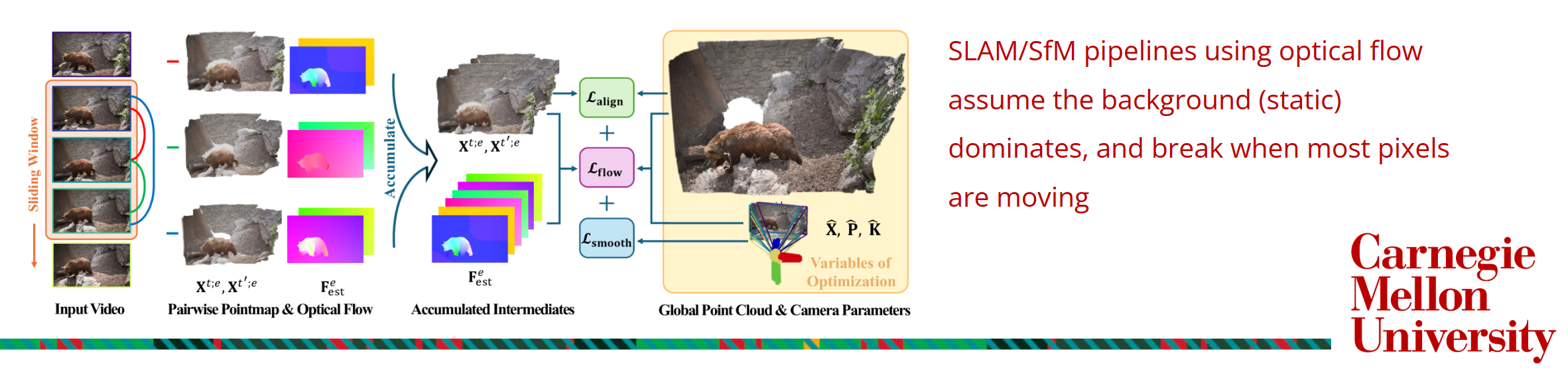

- SLAM/SfM fragility: Optical-flow pipelines that assume a mostly static scene collapse when motion dominates the frame.

- Tiny coverage: Common sets (< 1 km²) lack the spatial diversity needed for outdoor generalization.

- Tooling gap: Novel-view evaluation across indoor × outdoor × dynamic regimes is rare; Tartan-Dynamic bridges this gap.

My role

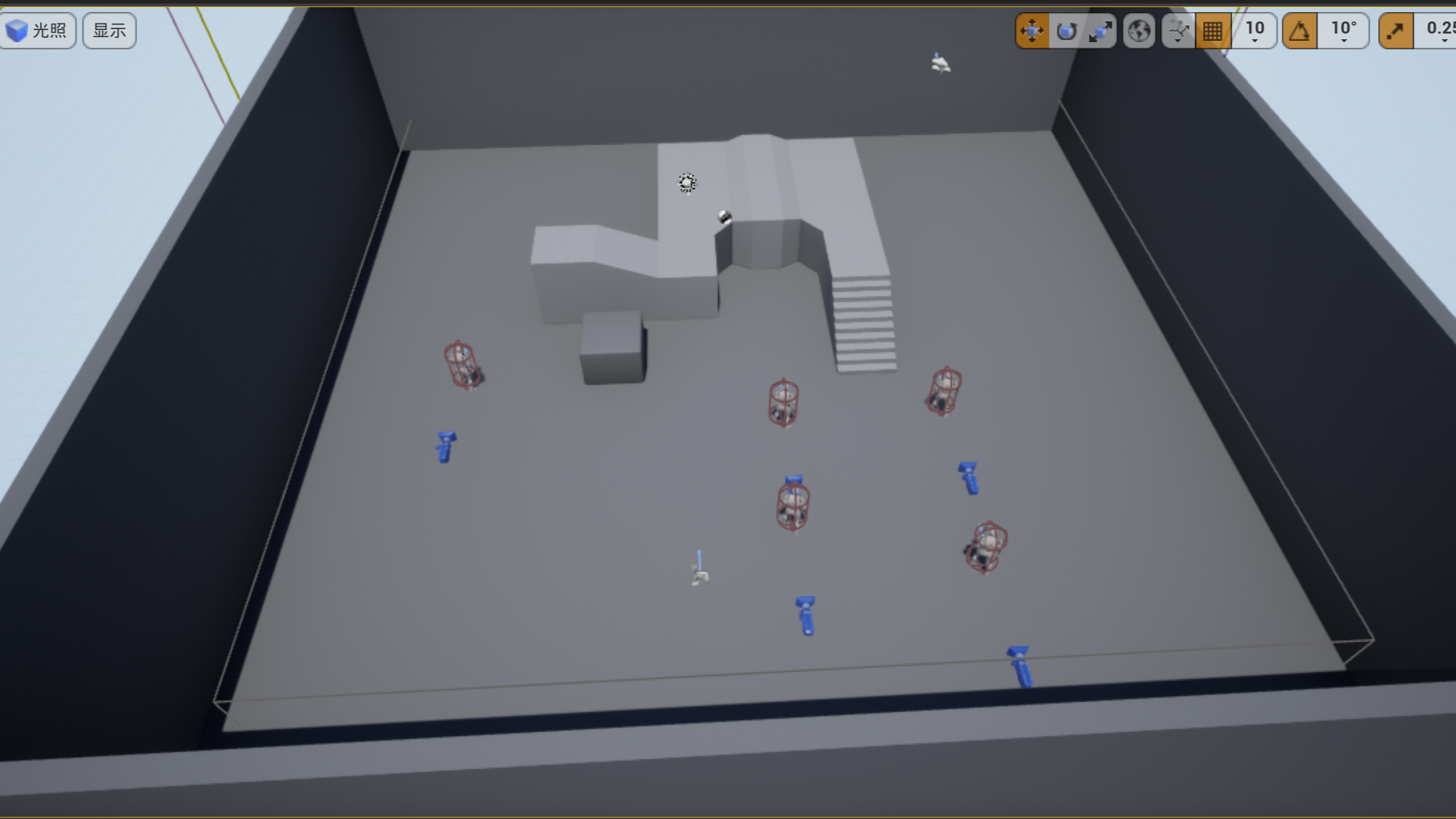

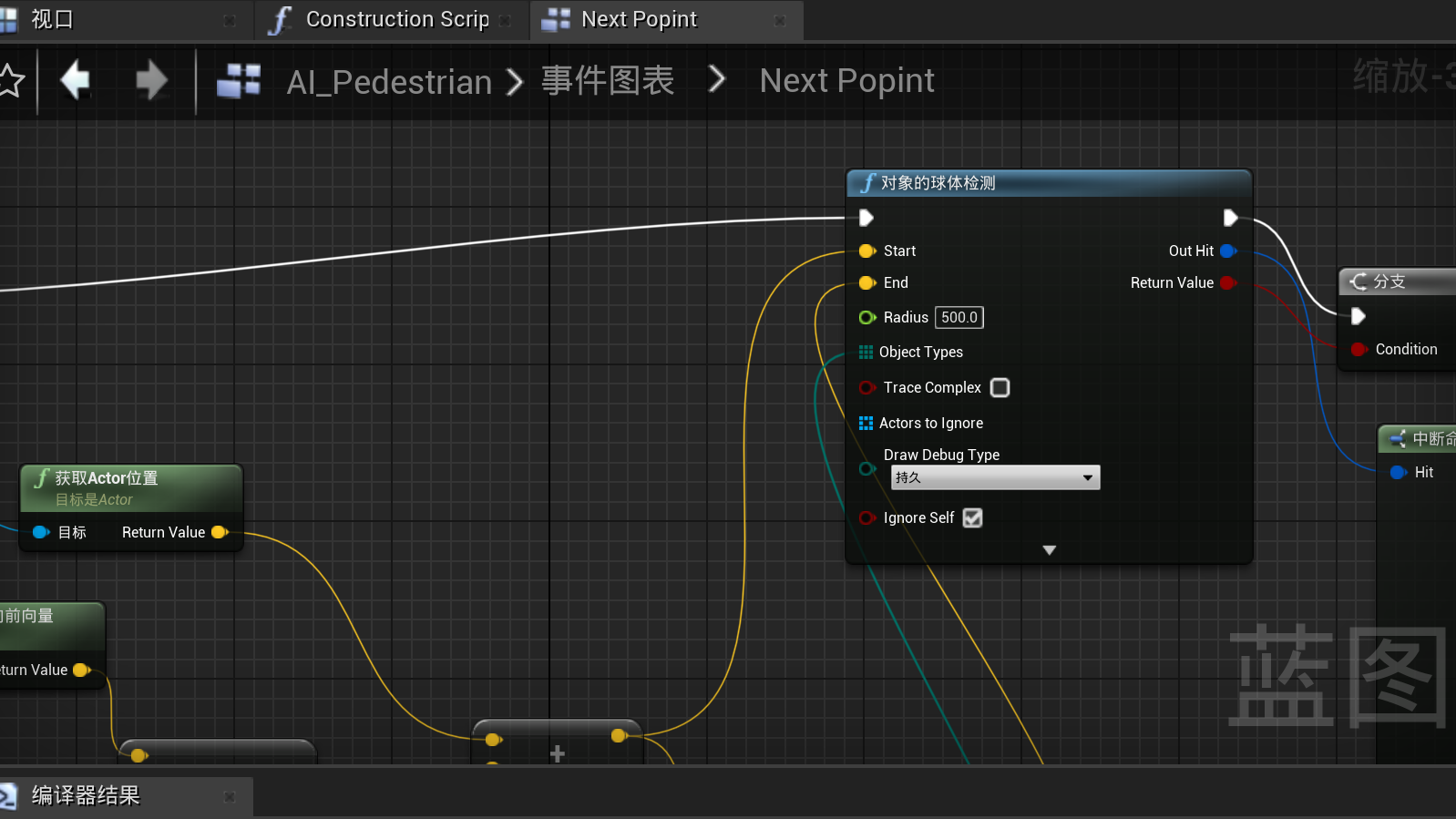

- Designed UE4 + AirSim simulation scenes and drone planner interface.

- Integrated EGO-Planner v2 with AirSim LiDAR via ROS topic relay.

- Implemented covisibility-based novel-view sampler for train/test splitting.

- Automated synchronized multi-sensor capture (RGB, Depth, LiDAR, Pose) for reproducible benchmarking.

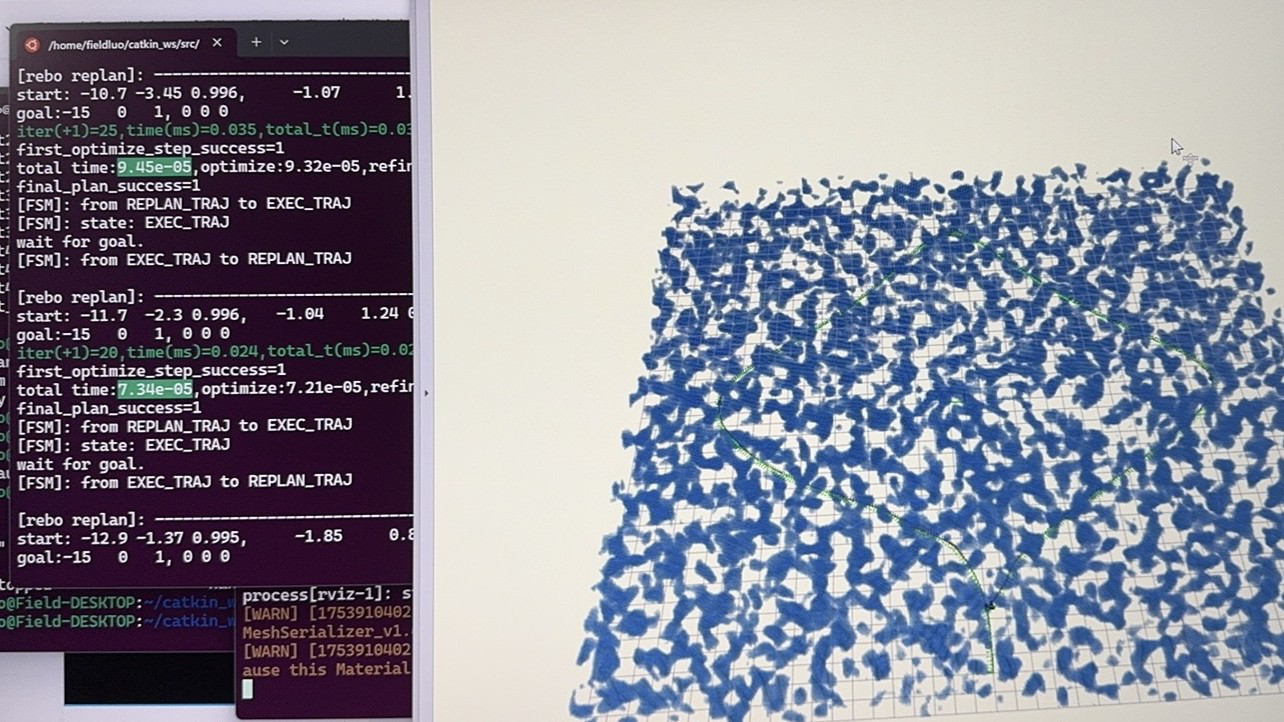

- Visualized trajectory graphs and ESDF voxels in RViz for live debugging.

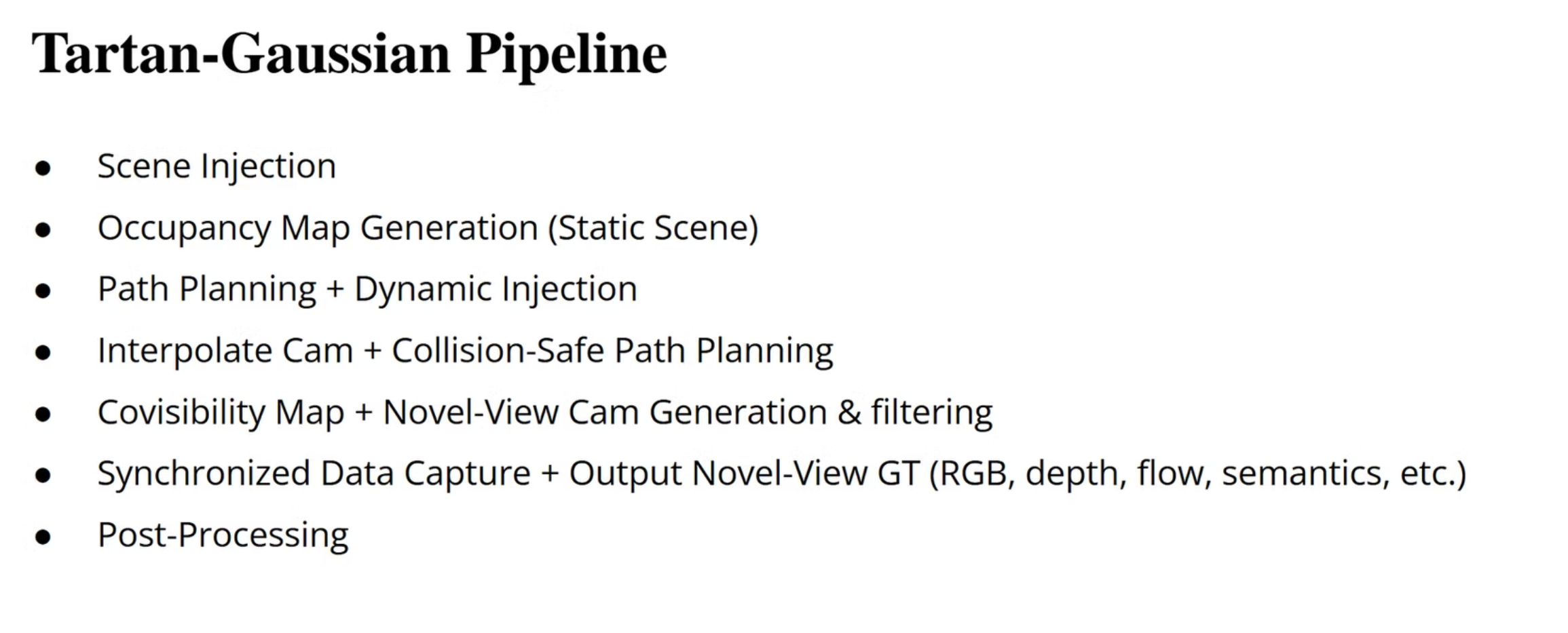

System overview

Simulation & Planning:

- UE 4.27 + AirSim generates RGB/Depth/Seg/LiDAR.

- airsim_ros_pkgs bridges sensor data to ROS topics.

- EGO-Planner builds occupancy grids and smooth trajectories.

Capture & Evaluation:

- Mapping pass writes Octo/voxel grid for feasible camera space.

- Novel-view sampler filters poses by covisibility test.

- Rendered outputs synchronized and timestamped for training metrics.
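The synchronization step can be illustrated with a nearest-timestamp matcher. This is a minimal sketch, not the project's capture code: the function names, the `max_skew` tolerance, and the choice of one stream as the reference clock are all assumptions made for illustration.

```python
from bisect import bisect_left


def nearest_index(sorted_stamps, t):
    """Return the index of the stamp in sorted_stamps closest to t."""
    i = bisect_left(sorted_stamps, t)
    if i == 0:
        return 0
    if i == len(sorted_stamps):
        return len(sorted_stamps) - 1
    before, after = sorted_stamps[i - 1], sorted_stamps[i]
    return i if (after - t) < (t - before) else i - 1


def synchronize(reference, others, max_skew):
    """Bundle each reference stamp with the nearest sample of every other stream.

    reference: sorted list of timestamps (e.g. RGB frames), in seconds.
    others:    dict mapping a stream name to its sorted list of timestamps.
    max_skew:  hypothetical tolerance; bundles with a larger gap are dropped.
    Returns a list of dicts mapping "ref" and each stream name to an index.
    """
    bundles = []
    for ref_idx, t in enumerate(reference):
        bundle = {"ref": ref_idx}
        complete = True
        for name, stamps in others.items():
            j = nearest_index(stamps, t)
            if abs(stamps[j] - t) > max_skew:
                complete = False  # this stream has no sample close enough
                break
            bundle[name] = j
        if complete:
            bundles.append(bundle)
    return bundles
```

Dropping incomplete bundles, rather than interpolating, keeps every saved frame backed by real sensor samples, at the cost of discarding some frames.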

Technical Challenges & Solutions

Benchmark gaps → Need true novel views

- Challenge: Train and test cameras share the same trajectories, so test views are near-duplicates of training views.

- Solution: Sampled off-trajectory poses filtered by covisibility maps.

- Outcome: Enforced extrapolation; models must reconstruct viewing angles they never saw in training.
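One way to picture the covisibility filter is as a band-pass on overlap: a candidate test pose is kept only if it shares some, but not all, of its visible map points with the training views. The sketch below is an assumption-laden illustration, not the sampler used in the project. It uses a plain frustum test with no occlusion handling, and the `lo`/`hi` thresholds, the near/far planes, and all function names are hypothetical.

```python
import numpy as np


def visible_mask(points, R, t, K, width, height, near=0.1, far=50.0):
    """Boolean mask of world points inside a camera frustum (no occlusion test).

    R, t map world to camera coordinates: x_cam = R @ x_world + t.
    K is the 3x3 pinhole intrinsics matrix.
    """
    cam = points @ R.T + t
    z = cam[:, 2]
    in_depth = (z > near) & (z < far)
    z_safe = np.where(in_depth, z, 1.0)  # avoid dividing by zero or negatives
    uv = cam @ K.T
    u = uv[:, 0] / z_safe
    v = uv[:, 1] / z_safe
    return in_depth & (u >= 0) & (u < width) & (v >= 0) & (v < height)


def covisibility(candidate_mask, train_masks):
    """Fraction of the candidate's visible points also seen by any training view."""
    seen = candidate_mask.sum()
    if seen == 0:
        return 0.0
    seen_in_train = np.any(train_masks, axis=0)
    return float((candidate_mask & seen_in_train).sum() / seen)


def select_novel_views(candidate_masks, train_masks, lo=0.3, hi=0.8):
    """Indices of candidates whose covisibility falls inside [lo, hi].

    Below lo the view shows mostly unobserved content; above hi it is
    close to a duplicate of the training views. Both bounds are assumptions.
    """
    return [
        i
        for i, mask in enumerate(candidate_masks)
        if lo <= covisibility(mask, train_masks) <= hi
    ]
```

A production sampler would also need an occlusion check, for example by ray casting against the voxel map, since a frustum test alone overestimates what each camera sees.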

Too-static scenes → Raise dynamic load

- Challenge: Fewer than 50% of the pixels in each frame are moving.

- Solution: Injected multiple scripted actors with stop/start occlusion churn.

- Outcome: Evaluates motion-heavy robustness.
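The dynamic load of a frame can be quantified as the fraction of pixels covered by moving actors. The following is a small sketch under the assumption that each frame comes with a per-pixel instance-ID image (such as a decoded segmentation render) and that the IDs of the scripted actors are known; both inputs and the function name are hypothetical.

```python
import numpy as np


def moving_pixel_fraction(instance_ids, dynamic_ids):
    """Fraction of pixels whose instance ID belongs to a moving actor.

    instance_ids: HxW integer array of per-pixel instance IDs (assumed input).
    dynamic_ids:  iterable of IDs assigned to scripted, moving actors.
    """
    moving = np.isin(instance_ids, list(dynamic_ids))
    return float(moving.mean())
```

Logging this value per frame makes it possible to check whether a captured sequence actually reaches the intended level of motion.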

Planner bring-up at scale

- Challenge: ROS node timers and topic synchronization were brittle.

- Solution: Unified topic relays, staged node startup, finite timers.

- Outcome: Smooth voxel updates and reproducible B-splines.

Interpolation metrics inflated

- Challenge: PSNR/SSIM were computed over near-duplicate frames.

- Solution: Covisibility-masked metric evaluation.

- Outcome: Scores reflect true novel-view reconstruction.
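A masked metric restricts the error computation to a chosen set of pixels. The sketch below shows masked PSNR, assuming the mask marks test-view pixels that at least one training view observed; that interpretation of the mask, the function name, and the `max_val` default are assumptions rather than details from the project.

```python
import numpy as np


def masked_psnr(pred, target, mask, max_val=1.0):
    """PSNR computed only over pixels where mask is True.

    pred, target: HxWx3 float arrays with values in [0, max_val].
    mask:         HxW boolean array (assumed to be a covisibility mask).
    Returns NaN for an empty mask and inf for a perfect match.
    """
    if not mask.any():
        return float("nan")
    diff = (pred - target)[mask]  # shape (N, 3): masked pixels only
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

Returning NaN for an empty mask, instead of a number, keeps views with no valid pixels from silently skewing a dataset-level average.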

Depth + Pose flow limits → Renderer motion vectors

- Challenge: Ego-flow derived from depth and pose accounts only for camera motion, so pixels on moving objects are mislabeled.

- Solution (planned): Export the UE Velocity/Motion-Vector pass as per-pixel flow ground truth.

- Expected outcome: Valid optical-flow supervision even in high-motion scenes.
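To see why flow derived from depth and pose falls short, it helps to write it out. The sketch below computes the rigid flow induced by camera motion alone, assuming a pinhole camera, depth stored as z-distance along the optical axis, and a relative pose that maps frame-1 camera coordinates to frame-2 camera coordinates. The function name and these conventions are assumptions for illustration.

```python
import numpy as np


def ego_flow(depth, K, R_rel, t_rel):
    """Per-pixel flow (HxWx2) caused by camera motion only.

    depth: HxW z-depth of frame 1 (assumed planar depth, not ray length).
    K:     3x3 pinhole intrinsics.
    R_rel, t_rel: relative pose with x_cam2 = R_rel @ x_cam1 + t_rel.
    """
    h, w = depth.shape
    u, v = np.meshgrid(
        np.arange(w, dtype=np.float64), np.arange(h, dtype=np.float64)
    )
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)  # homogeneous pixels

    # Back-project every pixel to a 3D point in the frame-1 camera.
    rays = pix @ np.linalg.inv(K).T
    pts1 = rays * depth[..., None]

    # Move the points into the frame-2 camera and project them again.
    pts2 = pts1 @ R_rel.T + t_rel
    proj = pts2 @ K.T
    uv2 = proj[..., :2] / proj[..., 2:3]

    return uv2 - pix[..., :2]
```

Every pixel is treated as a static scene point here, so a pixel on a moving actor receives the flow a stationary surface would have. A renderer motion-vector pass would instead report the true per-pixel displacement.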

Results

- Working planning stack: AirSim → EGO-Planner pipeline generates smooth, collision-aware trajectories.

- Deterministic bring-up: One-command ROS launch; documented topic relays.

- Reproducible capture: RGB / Depth / LiDAR / Pose synchronized with timestamps & metadata.

- Novel-view readiness: Covisibility-filtered sampling yields non-redundant test sets.

- Dynamic-scene stress tests: Multiple actors and occlusions stress reconstruction methods.

- Flow GT roadmap: Motion-vector export plan underway for per-pixel ground truth.

- Artifacts & manifests: Auto-generated route manifests for quick re-runs and cross-machine portability.